
- NodePort

- Ingress

# Creating a NodePort

# Master and worker node information

# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

m-k8s Ready master 3d5h v1.18.4 192.168.56.10 <none> CentOS Linux 7 (Core) 3.10.0-1160.90.1.el7.x86_64 docker://18.9.9

w1-k8s Ready <none> 3d4h v1.18.4 192.168.56.101 <none> CentOS Linux 7 (Core) 3.10.0-1160.90.1.el7.x86_64 docker://18.9.9

w2-k8s Ready <none> 3d4h v1.18.4 192.168.56.102 <none> CentOS Linux 7 (Core) 3.10.0-1160.90.1.el7.x86_64 docker://18.9.9

w3-k8s Ready <none> 3d4h v1.18.4 192.168.56.103 <none> CentOS Linux 7 (Core) 3.10.0-1160.90.1.el7.x86_64 docker://18.9.9

# Object spec for the NodePort service

# cat nodeport.yaml

apiVersion: v1
kind: Service            # object kind: Service
metadata:                # metadata: name of the service
  name: np-svc
spec:                    # spec: label used by the selector
  selector:
    app: np-pods
  ports:                 # protocol and ports to use
    - name: http
      protocol: TCP
      port: 80
      targetPort: 80
      nodePort: 30000
  type: NodePort         # service type

# Creating the pod

# kubectl create deployment np-pods --image=sysnet4admin/echo-hname

deployment.apps/np-pods created

# Creating the NodePort service for the pod

# kubectl create -f nodeport.yaml

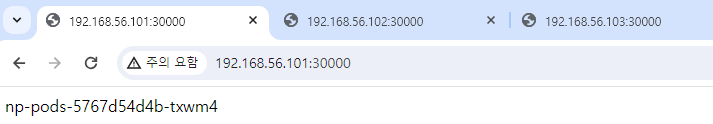

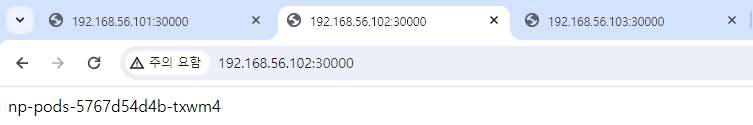

# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

np-pods-5767d54d4b-txwm4 1/1 Running 0 10m 172.16.103.169 w2-k8s <none> <none>

# kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

np-svc NodePort 10.104.110.226 <none> 80:30000/TCP 7m32s
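The service above maps port 30000 on every node to port 80 of the `np-pods` pod, so any worker node IP should answer on that port. A minimal sketch for checking this from a host that can reach the 192.168.56.0/24 network follows. The node IPs and the port come from the outputs above; the function names, the `RUN_CHECK` switch, and the script name `check-nodeport.sh` are hypothetical, and the `echo-hname` image is assumed to reply with the pod's hostname.

```shell
#!/usr/bin/env bash
# Worker node IPs and nodePort taken from the kubectl outputs above.
NODE_IPS="192.168.56.101 192.168.56.102 192.168.56.103"
NODE_PORT=30000

# Print one URL per worker node for the NodePort service.
nodeport_urls() {
  local ip
  for ip in $NODE_IPS; do
    echo "http://${ip}:${NODE_PORT}"
  done
}

# Request each URL once and show what came back (hypothetical helper).
check_nodeport() {
  local url
  for url in $(nodeport_urls); do
    printf '%s -> ' "$url"
    curl -s --max-time 3 "$url" || echo "(no response)"
  done
}

# Only touch the network when explicitly asked to,
# e.g. RUN_CHECK=1 bash check-nodeport.sh
if [ "${RUN_CHECK:-0}" = "1" ]; then
  check_nodeport
fi
```

Every node should return a response even though the pod runs only on w2-k8s, because a NodePort service is reachable through any node in the cluster.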

# Creating an Ingress

# cat ingress-config.yaml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-nginx
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - http:
      paths:
      - path:
        backend:
          serviceName: hname-svc-default
          servicePort: 80
      - path: /ip
        backend:
          serviceName: ip-svc
          servicePort: 80
      - path: /your-directory
        backend:
          serviceName: your-svc
          servicePort: 80
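The manifest above uses `networking.k8s.io/v1beta1`, which the v1.18.4 cluster in this post serves. That API version was removed in Kubernetes 1.22; `networking.k8s.io/v1` has been available since 1.19. As a rough sketch of how the same rules might look in the v1 schema, the snippet below writes an equivalent file. The file name `ingress-config-v1.yaml` is hypothetical, the empty first path is assumed to mean the root path `/`, and `pathType: Prefix` is an assumption about the intended matching. The 0.30.0 controller used in this post targets the beta API, so a newer controller release is likely needed before applying this.

```shell
#!/usr/bin/env bash
# Write a networking.k8s.io/v1 variant of ingress-config.yaml.
# The output file name is hypothetical.
cat > ingress-config-v1.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-nginx
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - http:
      paths:
      - path: /                 # assumption: the empty path means the root path
        pathType: Prefix        # pathType is required in v1
        backend:
          service:
            name: hname-svc-default
            port:
              number: 80
      - path: /ip
        pathType: Prefix
        backend:
          service:
            name: ip-svc
            port:
              number: 80
      - path: /your-directory
        pathType: Prefix
        backend:
          service:
            name: your-svc
            port:
              number: 80
EOF
echo "wrote ingress-config-v1.yaml"
```

The main differences are the required `pathType` field and the nested `backend.service.name` / `backend.service.port.number` fields that replace `serviceName` and `servicePort`.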

# cat ingress-nginx.yaml

# All of sources From https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.30.0/deploy/static/mandatory.yaml

# clone from above to sysnet4admin

apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---

kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---

kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---

kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses/status
    verbs:
      - update

---

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get

---

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      # wait up to five minutes for the drain of connections
      terminationGracePeriodSeconds: 300
      serviceAccountName: nginx-ingress-serviceaccount
      nodeSelector:
        kubernetes.io/os: linux
      containers:
        - name: nginx-ingress-controller
          image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.30.0
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          securityContext:
            allowPrivilegeEscalation: true
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            # www-data -> 101
            runAsUser: 101
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown

---

apiVersion: v1
kind: LimitRange
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  limits:
  - min:
      memory: 90Mi
      cpu: 100m
    type: Container

# cat ingress.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
spec:
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 30100
  - name: https
    protocol: TCP
    port: 443
    targetPort: 443
    nodePort: 30101
  selector:
    app.kubernetes.io/name: ingress-nginx
  type: NodePort

# kubectl create deployment in-hname-pod --image=sysnet4admin/echo-hname

deployment.apps/in-hname-pod created

# kubectl create deployment in-ip-pod --image=sysnet4admin/echo-ip

deployment.apps/in-ip-pod created

# kubectl apply -f ingress-nginx.yaml

namespace/ingress-nginx created

configmap/nginx-configuration created

configmap/tcp-services created

configmap/udp-services created

serviceaccount/nginx-ingress-serviceaccount created

clusterrole.rbac.authorization.k8s.io/nginx-ingress-clusterrole created

role.rbac.authorization.k8s.io/nginx-ingress-role created

rolebinding.rbac.authorization.k8s.io/nginx-ingress-role-nisa-binding created

clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress-clusterrole-nisa-binding created

deployment.apps/nginx-ingress-controller created

limitrange/ingress-nginx created

# kubectl apply -f ingress-config.yaml

ingress.networking.k8s.io/ingress-nginx configured

# kubectl apply -f ingress.yaml

service/nginx-ingress-controller created

# kubectl expose deployment in-hname-pod --name=hname-svc-default --port=80,443

service/hname-svc-default exposed

# kubectl expose deployment in-ip-pod --name=ip-svc --port=80,443

service/ip-svc exposed

# kubectl get pods -n ingress-nginx

NAME READY STATUS RESTARTS AGE

nginx-ingress-controller-5bb8fb4bb6-mnx88 1/1 Running 0 23s

# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-nginx <none> * 80 40m

# kubectl get services -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx-ingress-controller NodePort 10.101.20.235 <none> 80:30100/TCP,443:30101/TCP 20s

# kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

hname-svc-default ClusterIP 10.97.228.75 <none> 80/TCP,443/TCP 18s

ip-svc ClusterIP 10.108.49.235 <none> 80/TCP,443/TCP 9s

# kubectl get pods

NAME READY STATUS RESTARTS AGE

in-hname-pod-8565c86448-d8q9h 1/1 Running 0 2m40s

in-ip-pod-76bf6989d-j7pdk 1/1 Running 0 2m30s
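With the controller exposed on nodePort 30100 and the rules from `ingress-config.yaml` in place, requests to `/` should reach `hname-svc-default` and requests to `/ip` should reach `ip-svc`. The following is a minimal sketch for trying both paths. The node IP and ports come from the outputs above; the function names, the `RUN_CHECK` switch, and the script name `check-ingress.sh` are hypothetical, and any of the node IPs could be used in place of 192.168.56.101.

```shell
#!/usr/bin/env bash
# Node IP, HTTP nodePort, and paths taken from the manifests and outputs above.
INGRESS_HOST=192.168.56.101
INGRESS_HTTP_PORT=30100
INGRESS_PATHS="/ /ip"

# Print one URL per Ingress path.
ingress_urls() {
  local p
  for p in $INGRESS_PATHS; do
    echo "http://${INGRESS_HOST}:${INGRESS_HTTP_PORT}${p}"
  done
}

# Request each URL once and show what came back (hypothetical helper).
check_ingress() {
  local url
  for url in $(ingress_urls); do
    printf '%s -> ' "$url"
    curl -s --max-time 3 "$url" || echo "(no response)"
  done
}

# Only touch the network when explicitly asked to,
# e.g. RUN_CHECK=1 bash check-ingress.sh
if [ "${RUN_CHECK:-0}" = "1" ]; then
  check_ingress
fi
```

The `/your-directory` path is left out because the `your-svc` service it points to is not created in this walkthrough.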
